As noted in the previous Pulumi post, I had a bit too much to write about when describing my current home infrastructure. So here is a stand-alone post about just that: Pulumi (and pyinfra) at home.

Current hobby architecture

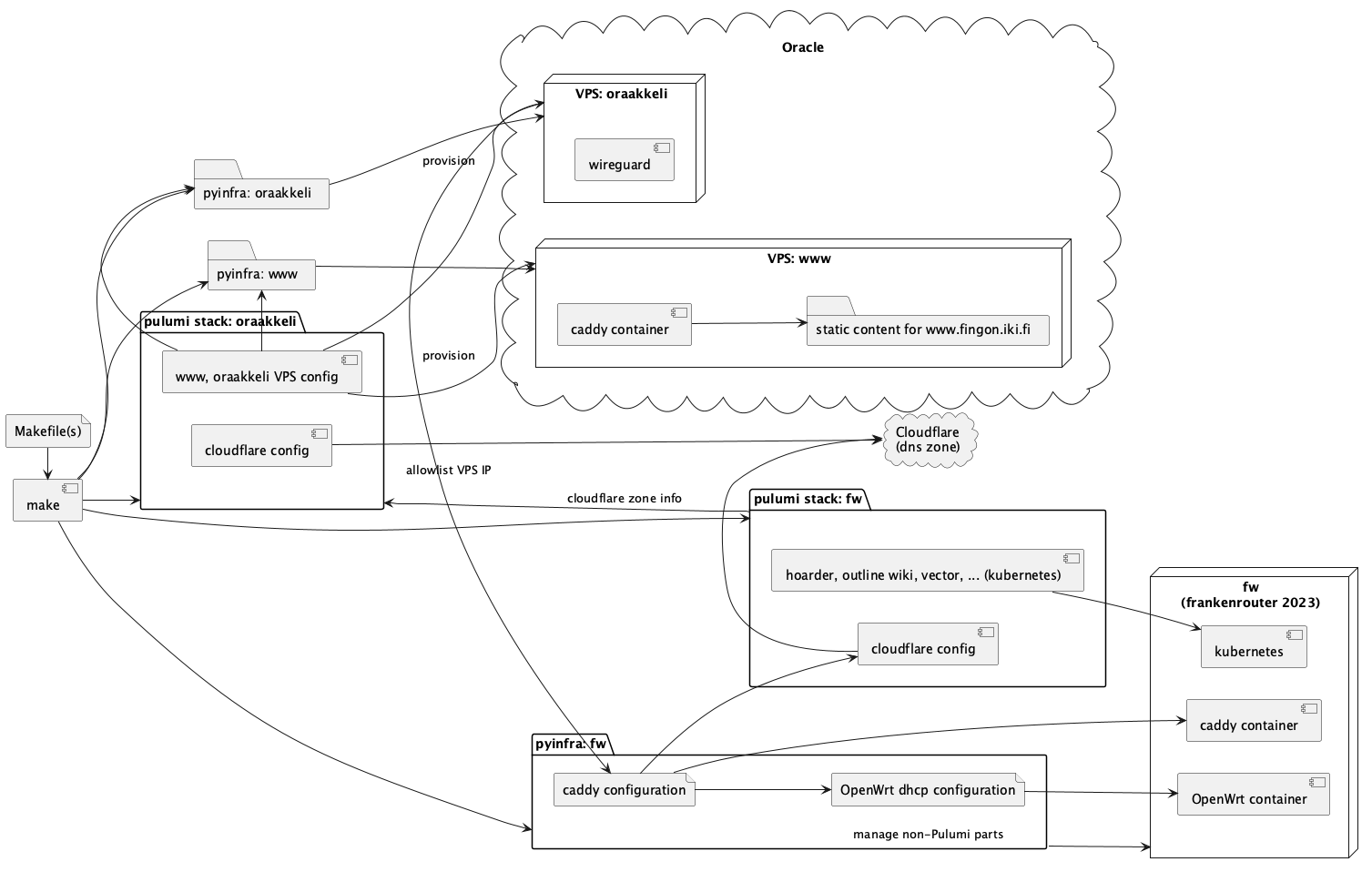

To give a concrete example of how I use Pulumi, this is a simplified version of my hobby IaC architecture. There are a lot of containers both inside and outside Kubernetes that I have omitted from the diagram for clarity:

The fw pyinfra/Pulumi provisioning configures the local infrastructure, and the oraakkeli Pulumi stack (plus two pyinfra configurations) handles my VPSes in Oracle Cloud.

The above infrastructure is perhaps noteworthy only in the sense that it is relatively densely interconnected, yet still fully IaC: I can bring things back to the state they were in (give or take restoring backups for data) quite rapidly.

Neat hack #1 - how does information flow when I add or remove services?

One noteworthy interaction is how I handle DNS entries for the set of services at home, which changes quite often. The root source of information about what I want publicly visible is Caddy's configuration file, the Caddyfile. The static IP of the oraakkeli VPS (from the oraakkeli Pulumi stack output) is injected into some of the rules, which allow access only from that IP or from the home network.

Once the Caddyfile has been rewritten, the following happens:

- OpenWrt: the /etc/config/dhcp fragments, which map the DNS entries to the local private IP, are updated in the OpenWrt container (so access from within the home network actually uses the private IP, and not the publicly published IPv4 or IPv6 address)

- Pulumi: the fw stack maintains the Cloudflare DNS zone automatically (both removing and adding entries)

- Gatus: configuration is produced to ensure that all the internal endpoints (the ones the Caddyfile points at) actually work

  - This has been quite a valuable approach, as getting a Matrix notification about a port being inaccessible has frequently been quite a fast way to detect that some upgrade has not been quite flawless for some particular containerised service
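The fan-out above could be sketched roughly as follows. This is not my actual script, just a minimal illustration under several assumptions: the hostnames, upstream addresses and the Caddy LAN IP are made up, the Caddyfile parsing only understands simple top-level site blocks with a `reverse_proxy` line, and the Gatus check is a plain TCP connectivity check. The function names are hypothetical.

```python
# Hypothetical Caddyfile; hostnames and upstream addresses are invented.
CADDYFILE = """\
outline.example.com {
	reverse_proxy 192.168.1.20:3000
}

hoarder.example.com {
	reverse_proxy 192.168.1.21:8080
}
"""


def parse_sites(caddyfile: str) -> dict[str, str]:
    """Map each top-level site address to its first reverse_proxy upstream.

    Deliberately naive: it tracks brace depth and ignores snippets,
    imports and multi-address site blocks.
    """
    sites: dict[str, str] = {}
    depth = 0
    current = None
    for raw in caddyfile.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if depth == 0 and line.endswith("{"):
            current = line[:-1].strip()
        elif depth >= 1 and current and line.startswith("reverse_proxy "):
            sites.setdefault(current, line.split()[1])
        depth += line.count("{") - line.count("}")
        if depth == 0:
            current = None
    return sites


def render_dhcp_domains(sites: dict[str, str], caddy_lan_ip: str) -> str:
    """Render OpenWrt UCI 'config domain' sections pointing at the LAN IP.

    Assumption: every published name should resolve to the private IP of
    the host running Caddy when queried from inside the home network.
    """
    blocks = [
        "config domain\n"
        f"\toption name '{host}'\n"
        f"\toption ip '{caddy_lan_ip}'\n"
        for host in sorted(sites)
    ]
    return "\n".join(blocks)


def render_gatus(sites: dict[str, str]) -> str:
    """Render a Gatus 'endpoints' list with one TCP check per upstream."""
    lines = ["endpoints:"]
    for host, upstream in sorted(sites.items()):
        lines += [
            f"  - name: {host}",
            f'    url: "tcp://{upstream}"',
            "    interval: 5m",
            "    conditions:",
            '      - "[CONNECTED] == true"',
        ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    parsed = parse_sites(CADDYFILE)
    print(render_dhcp_domains(parsed, "192.168.1.2"))
    print(render_gatus(parsed))
```

The point of the design is that the Caddyfile stays the single source of truth: the hostname list derived from it is what the DNS, monitoring and (via Pulumi) Cloudflare sides all consume.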

While the ‘trendy’ choice would be to just have a Traefik proxy in the Kubernetes cluster handle the dynamic reverse proxying, in practice the integrations described above add quite a lot of value, and they need just some Go code (Pulumi) and Python code (pyinfra, a hacky script or two).

So, why do I deal with the IP whitelisting in Caddy at all? It is my cheap and cheerful way of having limited access to my home. I do not want to even terminate Wireguard at home. Instead I just provide access, via the Caddy reverse proxy, to a few things that I do not want to expose elsewhere even with authentication (e.g. Hoarder API access: the API use prevents putting an authenticating proxy in front of it, which is what I do with many other services that I expose more widely with a mandatory Authelia authentication requirement). I suppose I could also do the same for the ssh port, but I have yet to have the need, and not having privileged remote access means it cannot be exploited by anyone through a security vulnerability somewhere along the way.
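As an illustration of the whitelisting, here is a minimal sketch of how such a site block could be rendered. The hostname, upstream and addresses are invented, `render_restricted_site` is a hypothetical helper, and the VPS address is simply passed in as a parameter (in my setup it would come from the oraakkeli Pulumi stack output). The generated block uses Caddy's `remote_ip` matcher to answer 403 to everyone not on the list.

```python
import ipaddress


def render_restricted_site(host: str, upstream: str, allowed: list[str]) -> str:
    """Render a Caddyfile site block reachable only from the allowed networks.

    Each entry in `allowed` may be a single address or a CIDR range; invalid
    entries raise ValueError before anything is written out.
    """
    nets = [str(ipaddress.ip_network(entry, strict=False)) for entry in allowed]
    return (
        f"{host} {{\n"
        f"\t@denied not remote_ip {' '.join(nets)}\n"
        "\trespond @denied 403\n"
        f"\treverse_proxy {upstream}\n"
        "}\n"
    )


if __name__ == "__main__":
    # 203.0.113.7 stands in for the VPS static IP, 192.168.1.0/24 for the LAN.
    print(
        render_restricted_site(
            "hoarder.example.com",
            "192.168.1.21:8080",
            ["203.0.113.7", "192.168.1.0/24"],
        )
    )
```

Validating the addresses up front is a small safety net: a typo in the allow list fails the provisioning run instead of silently producing a rule that matches nothing.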

Of course, all of the exposed containers I am running are still vulnerable to some potential exposure, but most of them are gated via Caddy and Authelia, and unless those two have some catastrophic vulnerability it is probably ‘fine’.

So far there haven’t been significant CVEs for them:

- Caddy (Caddyserver Caddy security vulnerabilities, CVEs, versions and CVE reports)

  - Nothing

- Authelia (Authelia versions and number of CVEs, vulnerabilities)

  - There were two problems 3+ years ago: one with a specific authentication backend I do not use, and another that was just a redirection attack

Neat hack #2: Avoid docker-compose and Helm charts

I am not a DSL fan (as described in the earlier post), nor a docker-compose fan (for various reasons I might expand on later). A lot of open-source software is packaged as docker-compose files, or as Helm charts. Pulumi provides an answer to both of these:

- It can use Helm charts (without actually keeping the Helm state in Kubernetes itself) with the v4 plugin

- It can use docker-compose definitions IF you convert them to Helm charts first using Kompose.

There have been some open-source projects that I have wanted to self-host (e.g. Outline, Hoarder) which consist of multiple containers that are awkward to set up standalone, and for which I wanted a bit better lifecycle than docker-compose offers. A Makefile converts the docker-compose file to a Helm chart, the chart values are populated in Pulumi, and the chart is then applied to the Kubernetes cluster. This combination has been quite a painless way to get those projects up and running in my environment.

Some docker-compose definitions are not supported by Kompose, but otherwise it has been pretty smooth sailing. One oddity I noticed is that I have to specify the ports of each container explicitly, or the container is not reachable even within the same namespace.
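The conversion step and the port oddity can be sketched as below. My real setup uses a Makefile, so treat this Python version purely as an illustration: `kompose_chart_command` and `services_without_ports` are hypothetical helpers, the compose data is invented, and the check works on an already parsed compose document (a plain dict) to avoid depending on a YAML library. My understanding, which is an assumption rather than something I have verified in the Kompose source, is that Kompose only generates a Kubernetes Service for compose services that declare `ports` (or `expose`), which would explain why containers without them are unreachable.

```python
def kompose_chart_command(compose_file: str, out_dir: str) -> list[str]:
    """Build the Kompose invocation that emits a Helm chart into out_dir."""
    return ["kompose", "convert", "--file", compose_file, "--chart", "--out", out_dir]


def services_without_ports(compose: dict) -> list[str]:
    """List compose services that declare neither 'ports' nor 'expose'.

    Assumption: these are the ones that end up without a Kubernetes Service
    after conversion and are therefore not reachable by name in the cluster.
    """
    services = compose.get("services", {})
    return sorted(
        name
        for name, spec in services.items()
        if not (spec or {}).get("ports") and not (spec or {}).get("expose")
    )


if __name__ == "__main__":
    # Invented example: 'worker' has no ports and would be flagged.
    compose = {
        "services": {
            "web": {"image": "example/web", "ports": ["3000:3000"]},
            "worker": {"image": "example/worker"},
        }
    }
    print(" ".join(kompose_chart_command("docker-compose.yml", "chart")))
    print("no ports declared:", services_without_ports(compose))
```

Running a check like this before the conversion makes the missing-port problem show up as a list of service names rather than as a mysterious connection failure later.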

Some thoughts about current IaC at home

The combination of Python and Go is a bit weird, but I have yet to see a pyinfra-grade configuration management tool that uses Go natively. Perhaps I will write one someday if one does not show up on its own before that. Another option would be to extend Pulumi to do in-node configuration management, but without an ecosystem equivalent to the Terraform plugins backing it up, it would probably not be that great a fit.